The ideal AI interface is probably not a chatbot

In my last post (spoilers!), I wrote:

Your [do-what-I-want] DWIW API invocation should be shorter and more precise than describing what you want done in natural language. Can you do it?

Digital Seams: “The ideal API is not RESTful, gRPC, or GraphQL”

Notice the implication here for chat-based interfaces: if all you have is a textbox to talk to your AI chatbot, it’s impossible to use it in a do-what-I-want way. If the provider really had a reliable and precise way to do something, they would just let you write the one-liner that perfectly meets your needs; no need for chit-chat.

There are three common kinds of conversational interfaces:

This is just a menu

I wish this were just a menu

Ya like jazz?

This is just a menu

Have you ever suffered through an automated phone tree?

Thank you for calling Dr. Bob’s office. Please listen carefully as our options have changed. If this is a life-threatening emergency, please hang up and dial 911. Our hours are 10 am to 2 pm, Monday through Thursday. For appointments, press 2. For billing questions, press 3.

This is not an intelligent experience or one that anyone enjoys. This is just a menu that has been linearized into sound so you can interact with it over a phone call. It’s the auditory equivalent of a board that you can point to from your hospital bed, or of the way Jean-Dominique Bauby, who had locked-in syndrome, wrote the entire book The Diving Bell and the Butterfly: by blinking when his transcriber, reciting the alphabet, reached the right letter.

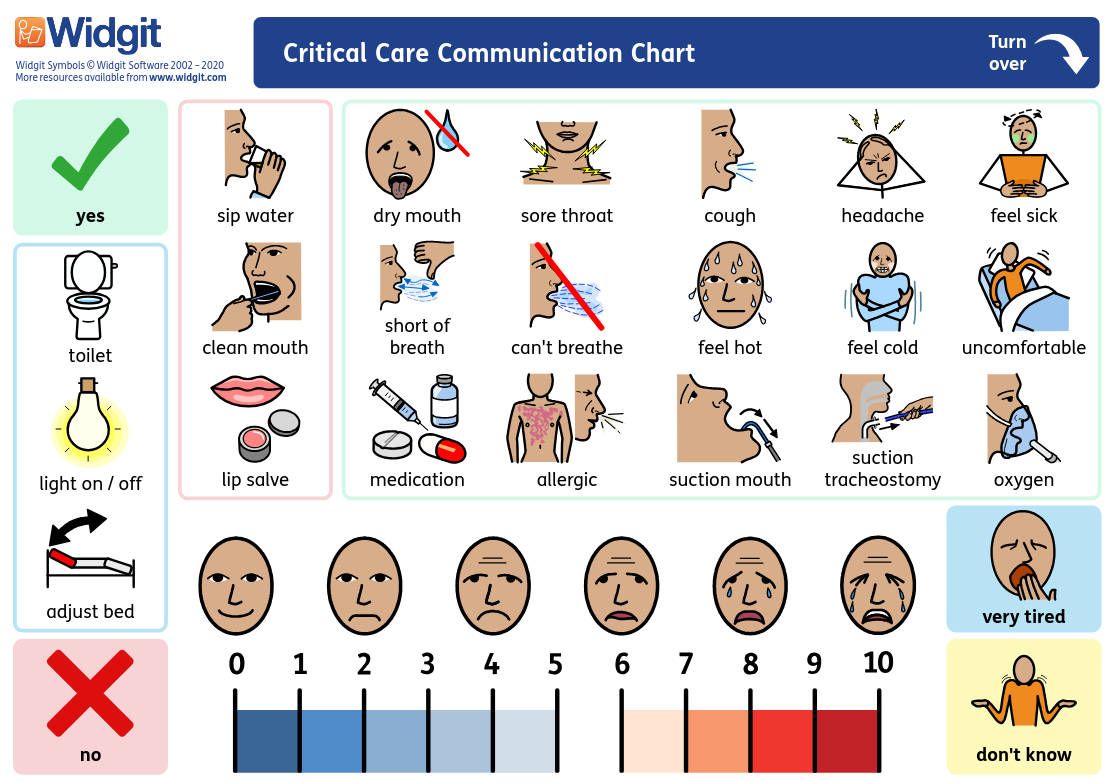

This symbol board from Widgit Health was created to help medical staff to communicate quickly and easily with patients who are critically ill due to Covid-19, even if the patient is being ventilated or has a tracheostomy.

And yet, it often gets the job done. Anyone who needs to talk to Dr. Bob regularly probably has a more direct phone extension, or at least has memorized the phone tree options (they haven’t changed in a decade, despite the “listen carefully” preamble). Everyone else can wait around until their relevant option appears. That option exists, right?
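
To make the "linearized menu" idea concrete, here is a minimal Python sketch. It assumes the phone tree is nothing more than a lookup table from keypad digits to destinations; `PHONE_TREE`, `linearize`, and `route` are hypothetical names for illustration, not anything Dr. Bob's office actually runs.

```python
# Hypothetical phone tree: keypad digits mapped to destinations.
# The two options come from the example greeting above.
PHONE_TREE = {
    "2": "appointments",
    "3": "billing questions",
}


def linearize(menu):
    """Read the menu aloud in order, one option at a time."""
    return " ".join(f"For {label}, press {key}." for key, label in menu.items())


def route(menu, key_pressed):
    """Return the destination for a key press, or None if no such option exists."""
    return menu.get(key_pressed)


print(linearize(PHONE_TREE))
print(route(PHONE_TREE, "3"))
```

The caller has to sit through `linearize` to learn the keys, but once they know them, `route` is a single lookup. That is why memorizing the options works so well.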

I wish this were just a menu

What about “smarter” options?

Thank you for calling Premier Bank. Tell me about why you’re calling today.

> Uh… direct deposit.

Sorry, I didn’t understand that. Talk some more. For example: “I would like to check my account balance.”

> Uh… I would like to set up direct deposit.

Sorry, I didn’t understand that. Try rephrasing your request as a question. For example: “What are the branch hours in San Francisco?”

> Agent! Representative! Please no more!

This kind of system makes you regret cursing the phone tree menu. It’s an exercise in rephrasing your request in hopes that you’ll stumble on the magic command that the system understands, or (if you’re like me) trying to skip over the robotic humiliation to find a human to talk to. It probably helps cut down on support wait times though, since people will just hang up instead of suffering through it.

In theory this could work well if the system could actually route my requests correctly. But it usually doesn’t. It might also help if I knew every possible option - but then we’re back to the menu and its associated problems.

Ya like jazz?

The final kind of conversation is the most interesting kind. It’s a true conversation - where you don’t already have an end destination in mind. It’s one where there is a feedback loop between you and your conversation partner; where you each react to the shared context; and you might jointly create something new or learn something unexpected. I think these are the best use cases for chatbots, from ELIZA to ChatGPT.

I actually do like jazz and even play the saxophone in a jazz group sometimes. When we improvise and “trade fours”, we exchange quick improvised phrases in turn, and you’ll hear the same musical idea - a melody, a rhythm, or some other pattern - played and re-played and re-imagined by each member of the band. We’re reacting to each other, and it creates something better.

Remember that you don’t need to acquire a taste for freeform jazz; Ted Gioia remarked in How to Listen to Jazz that almost all jazz you’ll ever hear follows a “theme-and-variations” pattern in which musicians improvise over the harmonies of the song. There is a structure that guides the madness. Similarly, I think purpose-specific LLM applications like Perplexity or Elicit deliver better user experiences than general-purpose ones like ChatGPT; the limitations make the tool better, because they provide an expected structure.

Implications for chatbots and AI

A good chatbot can’t be replaced by a menu or form. That implies there’s a feedback loop between the user and the chatbot.

Why would we need that feedback? Drew Breunig’s distinction between “Interns” and “Cogs” helps a lot:

Interns: Supervised copilots that collaborate with experts, focusing on grunt work.

Cogs: Functions optimized to perform a single task extremely well, usually as part of a pipeline or interface.

Drew Breunig: “The 3 AI Use Cases: Gods, Interns, and Cogs”

This post (worth the full read, by the way) filled in the missing piece of my understanding, so now I can properly complain about chatbots.

The most annoying chatbots are the ones doing Cog work - I just want to spin this Cog in a reliable way so I can talk to my doctor or whatever. I really don’t care if it’s quote-unquote AI at all, whether it’s a 70-billion-parameter LLM, some kind of neural net, or even a bunch of if-statements under the hood. The chat format isn’t necessary.
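
As a rough illustration of what "spinning a Cog" could look like without a chat window, here is a small Python sketch. Everything in it is an assumption made up for this example: the `book_appointment` function, the `Appointment` type, and the Monday-through-Thursday rule borrowed from the phone greeting earlier. The point is only the shape of the call: explicit inputs, one predictable result, and a clear error instead of "Sorry, I didn’t understand that."

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Appointment:
    patient: str
    day: date
    reason: str


def book_appointment(patient: str, day: date, reason: str) -> Appointment:
    """Hypothetical Cog: one task, explicit inputs, predictable output."""
    # Assumed rule: the office is open Monday (0) through Thursday (3).
    if day.weekday() > 3:
        raise ValueError("the office is open Monday through Thursday")
    return Appointment(patient, day, reason)


# One line that says exactly what I want; no chit-chat required.
appt = book_appointment("Alice", date(2024, 6, 3), "checkup")
print(appt)
```

Whether the body of that function is an LLM, a neural net, or the if-statement shown here makes no difference to the caller.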

The best chatbots are Interns; they might be wrong, and I’m going to need to spot-check their work to make sure it’s what I actually wanted. Or better yet! I might be wrong, and they can help me realize my mistake.