Skip the A/B test

I used to feel guilty about not running A/B tests, but not anymore.

At my first job, I worked on internal tools for managing cloud infrastructure. They were used consistently but not often, so it would’ve taken months-to-never to get enough data for an A/B test. Ah, maybe someday I’d be able to run experiments like they do at BigTechCo, calculate statistical significance, and make data-driven decisions. But instead, I had to go schlep around different Slack channels and talk to our users 1-on-1.

—

From the outside, the A/B test looks like the ultimate way to let the rubber hit the road: why not ship it and let real users reveal their preferences? In the words of Adam Mastroianni:

Just run some experiments, get some numbers, do a few tests! A good word for this is naive research. If you’re asking questions like “Do people look more attractive when they part their hair on the right vs. the left?” or “Does thinking about networking make people want soap?” or “Are people less likely to steal from the communal milk if you print out a picture of human eyes and hang it up in the break room?” you’re doing naive research.

Adam Mastroianni via Experimental History: New paradigm for psychology just dropped

By the way, his blog post is about psychology research, not tech companies or A/B testing - but this next passage really sparked the connection for me:

You can do naive research forever without making any progress. If you’re trying to figure out how cars work, for instance, you can be like, “Does the car still work if we paint it blue?” *checks* “Okay, does the car still work if we… paint it a slightly lighter shade of blue??”

Adam Mastroianni via Experimental History: New paradigm for psychology just dropped

Sounds familiar…

A slightly lighter shade of blue

Does the car still work if we paint it a slightly lighter shade of blue? How about the toolbar on the website? In 2009, Marissa Mayer famously asked her team to run A/B tests between 41 different shades of blue for a toolbar on the Google website. (The company’s first visual designer, Douglas Bowman, left later that year, citing the blue incident in his goodbye blog post.)

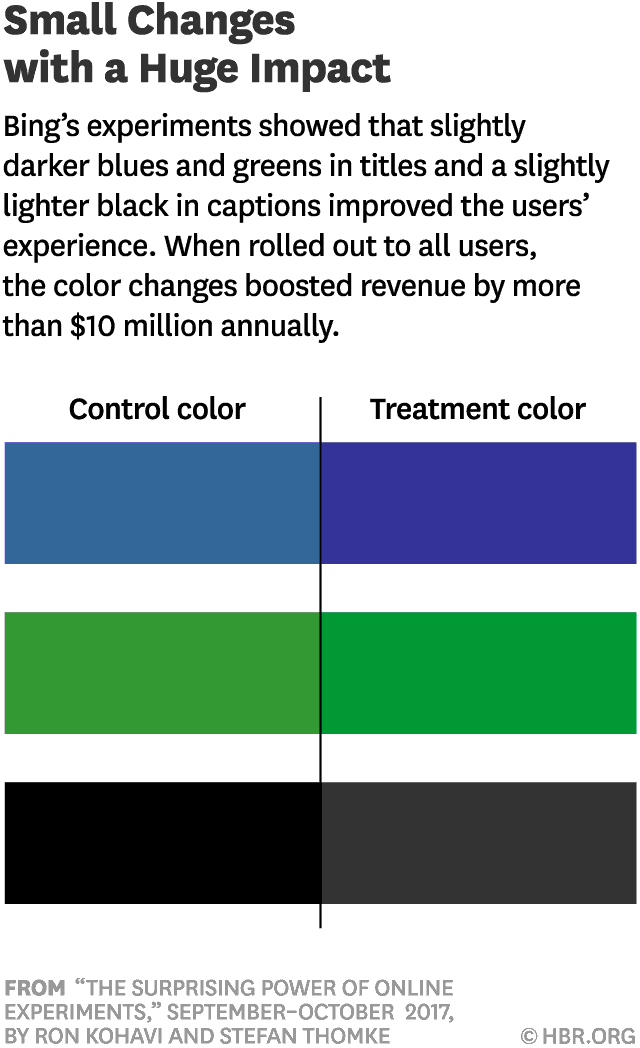

And this isn’t just a case of executive whims - at the scale of the big consumer tech giants, it does make a difference. As Harvard Business Review reported in 2017, Microsoft Bing improved revenue by over $10 million annually by tweaking colors on their site.

These tests involve hundreds of thousands or millions of users, and that’s only a fraction of all users of Bing and Google. At that scale, (tiny percentage) * (large base number) adds up to real money. As Dan Luu has written, companies of a certain scale can pay the salaries of in-house expertise forever off of a single 0.5% improvement.

But can you?

Optimizing versus understanding

I’d say that most times A/B tests are suggested, they’re overkill. They’re trying to optimize even before we fully understand the situation.

Working at a start-up, I don’t have access to the well-understood money printers of big tech. I don’t have access to one million users, especially working on B2B products. And most importantly, I don’t have the time to waste on naive research.
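To put a rough number on "I don’t have access to one million users", here is a back-of-the-envelope sketch using the standard normal-approximation sample size formula for comparing two conversion rates. The 5% baseline rate, the 0.05 significance level, the 80% power, and the function names are my own illustrative assumptions, not figures from any real product.

```python
from math import ceil
from statistics import NormalDist


def users_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate users needed in EACH arm of a two-sided A/B test.

    Uses the textbook normal-approximation formula for two proportions.
    All inputs are hypothetical; plug in your own numbers.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_to_finish(per_arm, weekly_users):
    """Weeks needed to fill both arms at a given weekly traffic level."""
    return ceil(2 * per_arm / weekly_users)


if __name__ == "__main__":
    tiny = users_per_arm(0.05, 0.005)  # a 0.5% relative improvement
    big = users_per_arm(0.05, 0.20)    # a 20% relative improvement
    print(f"0.5% lift: {tiny:,} users per arm")
    print(f"20% lift:  {big:,} users per arm")
    print(f"weeks at 2,000 users/week (0.5% lift): {weeks_to_finish(tiny, 2_000):,}")
```

Under these assumptions, detecting a 0.5% relative lift takes on the order of ten million users per arm, while a 20% lift needs only thousands. At B2B traffic levels, the first test never finishes; the second might, but a change that large tends to show up in a handful of conversations anyway.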

I need to learn about our customers, how they get value from our product, and how we can help them. A few interviews or other qualitative research will get me significantly farther than an A/B test - and build the understanding of the engine that drives my business, instead of the paint job on top. And when I don’t have infinite resources or runway, prioritizing the highest-order bit means ignoring the 0.5% optimizations along the way.

For faster insights, I can talk to users. I can build out mockups and run live usability tests. I can ship changes and roll them back (the “two-way door” approach), and even roll out the change gradually if it’s a risky one. To blindly A/B test is to abdicate responsibility.
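For the gradual rollout, one common approach is to hash each user into a stable bucket and turn the change on for buckets below a threshold. This is a minimal sketch of that idea, not a description of any particular feature-flag product; `in_rollout`, the feature name, and the user IDs are all hypothetical.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """Return True if this user should see the feature at `percent` (0-100).

    Hashing feature + user ID gives every user a stable bucket, so raising
    the percentage only ever adds users, and rolling back is just lowering
    the number again.
    """
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return bucket < percent * 100


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1_000)]
    for percent in (1, 10, 50, 100):
        enabled = sum(in_rollout(u, "new-dashboard", percent) for u in users)
        print(f"{percent:>3}% rollout -> {enabled} of {len(users)} users")
```

Unlike an A/B test, nothing here waits on statistical significance: you watch error rates and support channels as the percentage goes up, and dial it back to zero if something looks wrong.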

There might be good opportunities for A/B testing - consider Tal Raviv’s Please, Please Don’t A/B Test That for a checklist on when - but that means there are also lots of situations where you can do better.

How can we decide when to run an A/B test? Easy, just flip a coin! Heads: A/B test. Tails: something else. Then, to make sure the results are statistically significant…